I recently got my hands on an Azure Kinect and have been having some fun building a use case for tracking the engagement of people at exhibits in a room. Currently, though, the SDK is only available in C. I am not great at C/C++, so I built a minimum viable setup that gets the position data of the bodies from the Kinect, converts it to JSON, and pushes it to an Azure Function.

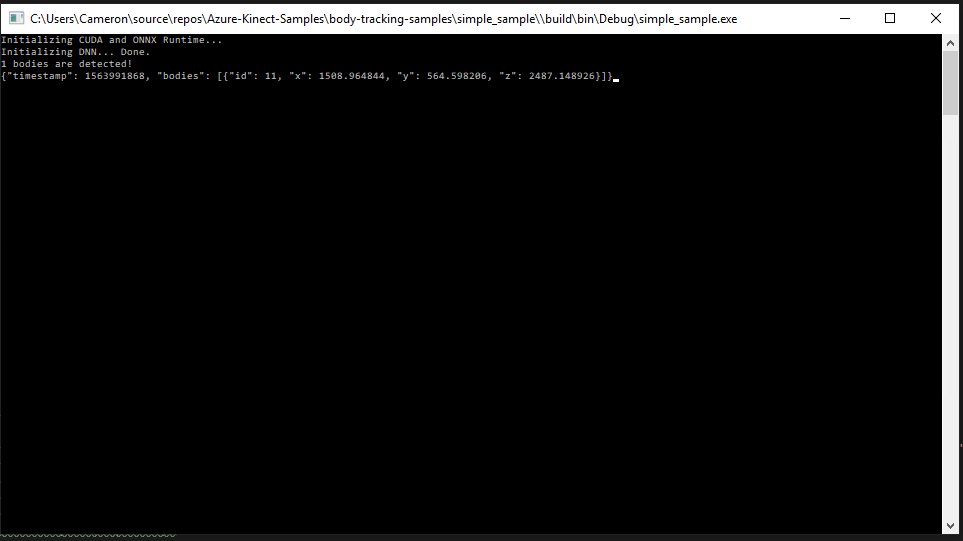

First and foremost, the end result. When it runs, you will see the JSON output and the current body count in the window. You can get your hands on the source code over on GitHub.

Thought Process

A few notes on decisions I made while building this.

- I am not good at C++, so I am sorry about the code. I used the first library I found in my less-than-excellent searching.

- I just track the pelvis location as the center of mass of a body.

- I haven’t nailed down the sample rate yet, so I default to one sample every second.

- I needed a hefty machine to make this work right (GeForce 980 Ti).
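To make the pelvis-only tracking and the once-per-second default a bit more concrete, here is a minimal sketch of that idea. It is not the code from the repository and it does not call the Kinect SDK at all: `BodyPosition`, `to_json`, `SampleThrottle`, and the JSON field names are all hypothetical names made up for illustration. It assumes you have already pulled a pelvis position (in millimeters) out of each tracked body, and it hand-rolls the JSON rather than using a library.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for one tracked body: only the pelvis position
// is kept (in millimeters) and treated as the body's center.
struct BodyPosition {
    std::uint32_t id;
    float x_mm;
    float y_mm;
    float z_mm;
};

// Build a small JSON document with the body count and one entry per body.
// Field names are illustrative. Non-finite floats (NaN, infinity) are not
// handled and would produce invalid JSON.
std::string to_json(const std::vector<BodyPosition>& bodies) {
    std::ostringstream out;
    out << "{\"bodyCount\":" << bodies.size() << ",\"bodies\":[";
    for (std::size_t i = 0; i < bodies.size(); ++i) {
        const BodyPosition& b = bodies[i];
        if (i > 0) out << ',';
        out << "{\"id\":" << b.id
            << ",\"x\":" << b.x_mm
            << ",\"y\":" << b.y_mm
            << ",\"z\":" << b.z_mm << '}';
    }
    out << "]}";
    return out.str();
}

// Lets a sample through only when at least `interval` has passed since
// the last accepted one. The first call always passes.
class SampleThrottle {
public:
    explicit SampleThrottle(std::chrono::milliseconds interval)
        : interval_(interval) {
        assert(interval.count() > 0);
    }

    bool should_sample(std::chrono::steady_clock::time_point now) {
        if (has_last_ && now - last_ < interval_) return false;
        last_ = now;
        has_last_ = true;
        return true;
    }

private:
    std::chrono::milliseconds interval_;
    std::chrono::steady_clock::time_point last_{};
    bool has_last_ = false;
};
```

In a capture loop, you would call `should_sample(std::chrono::steady_clock::now())` on each frame and only build and send the JSON when it returns true. Passing the time in as a parameter keeps the throttle easy to test without sleeping.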

Learning

There were some headaches getting the development environment set up. You need the full CUDA SDK installed at a specific version. That install is finicky and changes your video card drivers. Overall, this was frustrating.

I also needed to bring my gaming machine in to develop for the device, as I needed a dedicated Nvidia GPU.

At this point, the primary goal is to get the data up to the cloud and work from there. This is a quick way to get the data and move forward.

End Result

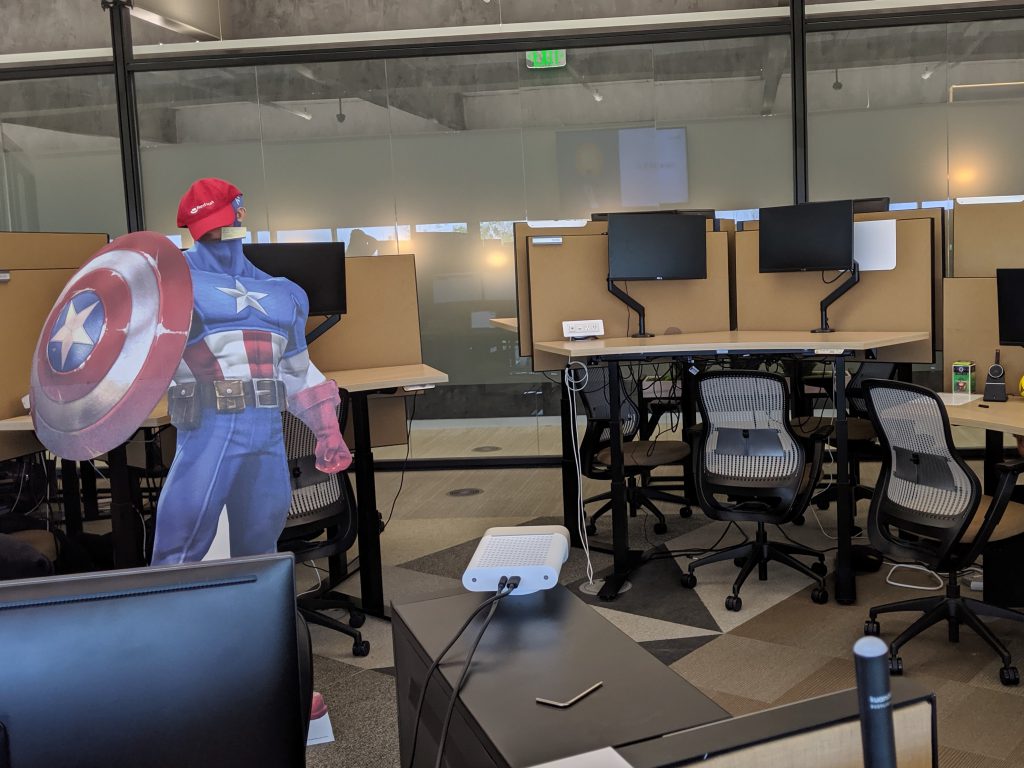

With the data now flowing up to the cloud, I was able to just push it over to blob storage as a JSON object. You could view that visualized on a website (which isn't running anymore) where I was just drawing the position data to a canvas. Currently, we are testing this out with a small area around our cubes. We have a very inconspicuous cardboard cutout that we are using as a person stand-in…